Dear readers,

To those who already support Scientists & Poets as paid subscribers — thank you. Your generosity keeps this work independent, deeply researched, and alive. For those reading freely, I hope you’ll consider joining them. Paid subscriptions make space for long-form writing that links science, art, and meaning — and include access to our Book Group on October 23, where we’ll be reading Meghan O’Gieblyn’s God, Human, Animal, Machine, a brilliant meditation on consciousness and faith in the age of AI. If you’re reading along and don’t have a link for the Zoom meeting, let me know.

With gratitude,

Kim / Scientists & Poets

On Consciousness, Entropy, and the Shape of Meaning

Physics keeps dissolving certainties. Every time we draw a hard line—wave or particle, matter or energy, cause or chance—the universe smudges it. Look close enough and the binary collapses. The deeper we peer, the more the cosmos answers both, depending on scale, on context, on how we choose to look.

Time is the most exquisite collapse of all.

Time at Different Scales

Einstein showed that time is not steady or shared. Special relativity tied the ticking of a clock to its speed (Einstein, 1905); general relativity, a decade later, tied it to gravity as well. A clock on a mountain runs faster than one by the sea. Your head ages ever so slightly faster than your feet. Time is local, tied to mass, motion, and position like a tide drawn by gravity.
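
To put numbers on the mountain and the head-and-feet examples, here is a minimal Python sketch using the weak-field approximation. In that approximation, a clock raised by a height h runs fast by a fraction of roughly gh/c². The heights (1.7 m, 1 km) are hypothetical, gravity is treated as constant over those heights, and effects from motion are ignored.

```python
# Weak-field approximation: a clock raised by height h runs fast by a
# fraction of roughly g * h / c**2 compared with a clock below it.
G = 9.81                                  # m/s^2, surface gravity, assumed constant over h
C = 299_792_458.0                         # m/s, speed of light
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def fractional_rate_gain(height_m: float) -> float:
    """Fraction by which the higher clock outpaces the lower one."""
    return G * height_m / C**2

def extra_seconds_per_year(height_m: float) -> float:
    """Extra time the higher clock accumulates over one year."""
    return fractional_rate_gain(height_m) * SECONDS_PER_YEAR

head_vs_feet = fractional_rate_gain(1.7)      # hypothetical 1.7 m person
mountain = extra_seconds_per_year(1000.0)     # hypothetical 1 km mountain

print(f"head vs feet: {head_vs_feet:.2e} faster")
print(f"1 km mountain: {mountain * 1e6:.1f} microseconds gained per year")
```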

Yet we live as though time flows evenly, the same everywhere. We imagine one cosmic clock keeping all of existence in step. But the universe doesn’t keep time; it keeps relationships. Two travelers moving at different speeds may disagree about which of two explosions happened first, and both will be right, provided the explosions are far enough apart that no signal, not even light, could pass from one to the other. Sequence itself depends on perspective.
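
The disagreement can be computed with the Lorentz transformation, t′ = γ(t − vx/c²). In this sketch the two explosions, their spacing (1 km apart, 1 microsecond apart), and the observer speeds (±0.5c) are hypothetical choices. The events are far enough apart that light could not travel between them in the time separating them.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def lorentz_time(t: float, x: float, v: float) -> float:
    """Time of event (t, x) as measured by an observer moving at velocity v along x."""
    gamma = 1.0 / math.sqrt(1.0 - (v / C) ** 2)
    return gamma * (t - v * x / C**2)

# Hypothetical explosions in the ground frame: (time in s, position in m).
# They are 1 km apart but only 1 microsecond apart; light covers about 300 m
# in that time, so neither explosion can influence the other.
event_a = (0.0, 0.0)
event_b = (1e-6, 1000.0)

for v in (-0.5 * C, 0.0, 0.5 * C):
    first = "A" if lorentz_time(*event_a, v) < lorentz_time(*event_b, v) else "B"
    print(f"observer at {v / C:+.1f}c sees explosion {first} first")
```

Observers at rest, or moving toward negative x, see A first; an observer moving at half light speed toward B sees B first. For events close enough that light could connect them, no choice of v reverses the order.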

At the human scale, time moves in one direction. Coffee cools, ashes scatter, a body ages, and memory fills with moments that do not return. The arrow of time points from order toward disorder, a tendency captured by the second law of thermodynamics: in an isolated system, entropy, a measure of how many ways a system’s parts can be arranged, tends to increase. There are far more ways for things to disperse than to stay arranged, so matter drifts toward the most probable configurations.
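
Counting makes “far more ways” concrete. This is a minimal sketch that assumes a toy gas of 100 distinguishable particles, each able to sit in the left or right half of a box. The number of arrangements W with k particles on the left is the binomial coefficient C(100, k), and Boltzmann’s entropy is S = k_B ln W.

```python
import math

N = 100  # hypothetical number of gas particles, each in the left or right half of a box

def microstates(n_left: int) -> int:
    """Number of ways to place n_left of N distinguishable particles in the left half."""
    return math.comb(N, n_left)

all_left = microstates(N)       # every particle crowded into one half
even = microstates(N // 2)      # particles spread evenly
total = 2 ** N                  # all possible arrangements

print(f"all on one side: {all_left} arrangement")
print(f"evenly spread:   {even:.3e} arrangements")
print(f"chance of finding all on the left by accident: {all_left / total:.1e}")

# Boltzmann's entropy in units of k_B: S / k_B = ln W
print(f"S/k_B, all left: {math.log(all_left):.1f}; even split: {math.log(even):.1f}")
```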

Entropy and time are connected statistically, not causally. The rise of entropy gives time its direction: we recognize “forward” because energy spreads rather than gathers. The passage of time itself continues regardless, but the asymmetry of entropy makes its movement perceptible.
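
The statistical nature of the arrow shows up in the Ehrenfest urn model, a classic toy system used here for illustration. At each step, one randomly chosen particle hops to the other side of a box. The rule has no preferred direction. Yet a system that starts fully ordered drifts toward an even split, simply because there are more ways to be mixed. The particle count, step count, and random seed are arbitrary assumptions.

```python
import random

def ehrenfest(n_particles: int, steps: int, seed: int = 0) -> list[int]:
    """Ehrenfest urn model: each step, one randomly chosen particle switches sides.

    Returns the number of particles on the left after each step, starting
    from the fully ordered state (all on the left).
    """
    rng = random.Random(seed)
    left = n_particles
    history = [left]
    for _ in range(steps):
        # The chosen particle is on the left with probability left / n_particles.
        if rng.random() < left / n_particles:
            left -= 1   # a left-side particle moves right
        else:
            left += 1   # a right-side particle moves left
        history.append(left)
    return history

history = ehrenfest(n_particles=100, steps=2000)
print("start:", history[0], "| after 100 steps:", history[100], "| after 2000:", history[-1])
```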

When energy flows through matter, it can create local order within that universal drift. A whirlpool keeps its shape only while water moves through it. A crystal grows as cooling liquid releases energy in a precise pattern. A storm, a leaf, a living cell—all persist because gradients of energy allow structure to form and hold for a while.

Life amplifies this process. Every cell maintains its form by burning fuel and releasing heat. The organism preserves its improbable structure by exporting entropy to its surroundings—using energy to build small, sustained pockets of order within the larger sea of disorder. Entropy in the universe still increases overall, but energy lets complexity endure within a system.

Consciousness carries this principle to its highest refinement. The brain makes up about 2 percent of the body’s mass yet uses roughly 20 percent of its resting energy, spent to preserve the electrical and chemical precision that thought requires. Every perception, memory, and feeling depends on that expenditure. Awareness is the internal side of that process: matter using energy to stay organized long enough to know itself.
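
For a sense of scale, the arithmetic below estimates the brain’s power draw in watts. It assumes the commonly cited figure that the brain uses about 20 percent of the body’s resting energy. It also assumes a round, illustrative resting budget of 2,000 kcal per day. Both numbers vary from person to person.

```python
KCAL_TO_J = 4184.0                  # joules per kilocalorie
SECONDS_PER_DAY = 24 * 3600

resting_kcal_per_day = 2000.0       # assumed round resting budget (illustrative)
brain_share = 0.20                  # commonly cited share of resting energy used by the brain

brain_kcal_per_day = resting_kcal_per_day * brain_share
brain_watts = brain_kcal_per_day * KCAL_TO_J / SECONDS_PER_DAY   # joules per day -> joules per second

print(f"{brain_kcal_per_day:.0f} kcal per day, about {brain_watts:.0f} W")
```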

Energy is the price of order, and life is the proof that paying it can give rise to meaning. Through constant exchange—heat for pattern, fuel for coherence—the universe briefly becomes aware of itself before returning to rest. Yet even this momentary awareness unfolds inside a larger symmetry. The same equations that govern decay also allow the possibility of order; they run forward and backward with equal ease. At the deepest level, the laws of physics don’t prefer one direction of time. Past and future appear only when you zoom out far enough that probability starts to rule.
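
Time symmetry in the underlying equations can be shown with a small simulation. This sketch integrates a mass on a spring with velocity Verlet, a standard time-reversible integration scheme chosen here for illustration. Run it forward, reverse the velocity, and run it again. The particle retraces its path back to the start, apart from tiny floating-point rounding.

```python
def accel(x: float) -> float:
    """Acceleration of a unit mass on a unit-stiffness spring (F = -x)."""
    return -x

def verlet(x: float, v: float, dt: float, steps: int) -> tuple[float, float]:
    """Velocity Verlet integration, a time-reversible scheme."""
    for _ in range(steps):
        v_half = v + 0.5 * dt * accel(x)
        x = x + dt * v_half
        v = v_half + 0.5 * dt * accel(x)
    return x, v

x0, v0 = 1.0, 0.0
x1, v1 = verlet(x0, v0, dt=0.01, steps=5000)    # run "forward"
x2, v2 = verlet(x1, -v1, dt=0.01, steps=5000)   # flip the velocity, run the same law again
print(f"start: x={x0}, v={v0}; after reversing: x={x2:.9f}, v={-v2:.9f}")
```

Flipping the velocity is the computational version of running the film backward: the same law that carried the particle away brings it home.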

At the level of particles, time is a still pond. At the level of people, it’s a river. The direction emerges with scale.

When Time Disappears

In attempting to unify quantum mechanics with general relativity, physicists found something very strange: equations that contain no reference to time at all. In the Wheeler–DeWitt equation (DeWitt, 1967), the wavefunction of the universe doesn’t unfold moment by moment; it describes all possible moments at once, like a landscape already drawn. Nothing in the math moves; everything simply is, complete and simultaneous. What we experience as change emerges only when one part of that landscape interacts with another.
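
Schematically, the contrast looks like this. Ordinary quantum mechanics evolves a state with an explicit time derivative. The Wheeler–DeWitt equation instead requires that the Hamiltonian constraint operator annihilate the state of the universe, written here as a functional of spatial geometry h_ij and matter fields φ. This is the standard schematic form, with technical details such as operator ordering set aside.

```latex
% Schrödinger equation: the state changes with an explicit time parameter t
i\hbar\,\frac{\partial \Psi}{\partial t} = \hat{H}\,\Psi

% Wheeler–DeWitt equation: a constraint on the whole state, with no t anywhere
\hat{\mathcal{H}}\,\Psi[h_{ij}, \phi] = 0
```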

Physicist Carlo Rovelli builds on Einstein’s theory of relativity and carries it further. Time, he proposes, is not a separate fabric but something that arises from relationships—when parts of the universe change relative to one another. A planet spins, a pendulum swings, heat moves from warm to cool, and through those interactions we sense time passing. What feels like flow may simply be the rhythm of energy spreading and matter transforming, what Rovelli calls “thermal time.”

In this view, time is real in experience but not fundamental in nature. It’s a pattern that emerges from motion and memory—a rhythm created by change itself.

The Shape of Information

Most of us use “information” to mean facts and plans. Today’s weather. A meeting time. A restaurant menu. Scientists who study consciousness use the word in a more technical way. For them, information is the measurable trace that change leaves in a system.

Using information theory, they start by describing the real possibilities a system can take. Then they measure how much uncertainty is cleared up when one of those possibilities actually happens.

A few footholds:

A forecast offers the percent chance something will happen. When you look outside and see rain or sun, uncertainty drops. That drop is information.

A traffic light can show red, yellow, or green. Until it changes, you hold three possible futures in mind—stop, slow, or go. The instant the color appears, all uncertainty ends. That clarity is information arriving.

A camera with millions of pixels could capture countless images. Each photograph fixes one pattern of light out of all the patterns that could have been. Every image is a record of choices the world has already made.

Brains live on constant updates. They keep many possibilities in play, spend energy to detect change, and adjust their internal model in response. Awareness is the ongoing rhythm of noticing what has shifted and revising what might come next.
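
Shannon’s formula, H = −Σ p log₂ p, turns these footholds into bits (Shannon, 1948). The probabilities below are assumptions for illustration: a 70 percent chance of rain, three equally likely light colors, and a hypothetical one-megapixel camera with 256 gray levels per pixel, all equally likely. Real photographs are far from uniform, so the camera figure is an upper bound.

```python
import math

def shannon_entropy(probs: list[float]) -> float:
    """Average information, in bits, gained when one outcome is revealed."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Forecast: a hypothetical 70% chance of rain, 30% chance of sun.
forecast_bits = shannon_entropy([0.7, 0.3])

# Traffic light: three colors, assumed equally likely for illustration.
light_bits = shannon_entropy([1 / 3, 1 / 3, 1 / 3])

# Camera: hypothetical 1,000,000 pixels, each one of 256 equally likely gray levels.
# The pixels are treated as independent, so their entropies simply add.
camera_bits = 1_000_000 * shannon_entropy([1 / 256] * 256)

print(f"forecast:      {forecast_bits:.3f} bits")
print(f"traffic light: {light_bits:.3f} bits")
print(f"camera:        {camera_bits:,.0f} bits at most")
```

The uncertain forecast carries less than one bit because rain was already likely; the less expected an outcome, the more information its arrival brings.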

The link to physics begins with a paradox. Entropy is the measure of possibility—the freedom energy has to take different forms. Information is the record left when one form takes shape. They are mirror languages describing the same event: the universe exploring its own options.
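
At the level of formulas, the mirror is exact. Shannon’s information entropy and the Gibbs entropy of statistical mechanics share one form and differ only by a constant that converts bits into physical units (joules per kelvin). Whether that shared form reflects a deeper identity is a question the math alone does not settle.

```latex
% Shannon entropy of a probability distribution p_i, in bits
H = -\sum_i p_i \log_2 p_i

% Gibbs entropy of a physical system whose microstates i occur with probability p_i
S = -k_B \sum_i p_i \ln p_i

% Because \ln p = (\ln 2)\,\log_2 p, the two differ only by a constant factor
S = (k_B \ln 2)\, H
```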

Life pushes this relationship to its limit. Every living system feeds on energy to build temporary islands of order within the sea of entropy. The same principle shapes the wider universe: energy flow creates structure wherever it moves—stars, storms, crystals, and cells all emerge from this dance of order and decay. Life is simply the most intricate form of that pattern, using energy to sustain complexity long enough to sense itself. DNA itself is a dense library of information, written and maintained by spending energy. The more a system resists decay, the more energy it must burn to keep its intricate pattern intact.
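
A rough estimate of that library’s raw size follows. Each DNA position holds one of four bases, or log₂ 4 = 2 bits. The sketch assumes a round figure of 3.1 billion base pairs for one copy of the human genome. It ignores compression, the second chromosome set, and chemical modifications.

```python
import math

BASES = 4                              # A, C, G, T
bits_per_base = math.log2(BASES)       # each position picks one of four letters: 2 bits
genome_length = 3.1e9                  # assumed round figure: base pairs in one copy of the human genome

total_bits = genome_length * bits_per_base
megabytes = total_bits / 8 / 1e6       # 8 bits per byte, 10**6 bytes per megabyte
print(f"about {total_bits:.1e} bits, roughly {megabytes:.0f} MB")
```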

Consciousness is the sharpest expression of that pattern. It is what high information density feels like from the inside: matter organized so tightly and dynamically that it can register its own changes. To hold many potential patterns at once—and to move fluidly among them—requires energy. Awareness carries a metabolic cost: the price of keeping many futures open while the universe around us moves toward equilibrium.

Time, energy, and information form a single braid. Energy makes change possible, change creates information, and information gives direction to time.

How Quantity Becomes Quality

Add enough of anything, and the whole starts behaving in new ways. A single water molecule has no wetness. But gather trillions and they flow, shimmer, and reflect. Heat doesn’t live in one atom either. It appears only in the restless, random jostling of a crowd of them.
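
Temperature as a crowd property can be sketched numerically. The code assumes an ideal gas of argon atoms, an arbitrary choice. It draws random velocities consistent with 300 K, then reads the temperature back from the average kinetic energy using equipartition, ⟨½mv²⟩ = (3/2)k_B T. One atom gives an erratic number; a large crowd gives a stable one.

```python
import math
import random

K_B = 1.380649e-23                      # Boltzmann constant, J/K
M_ARGON = 39.948 * 1.66053906660e-27    # mass of one argon atom, kg

def sample_velocities(n: int, temperature: float, seed: int = 1) -> list[tuple[float, float, float]]:
    """Draw n random 3D velocities from the Maxwell-Boltzmann distribution at the given temperature."""
    rng = random.Random(seed)
    sigma = math.sqrt(K_B * temperature / M_ARGON)   # standard deviation of each velocity component
    return [(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for _ in range(n)]

def temperature_from(velocities: list[tuple[float, float, float]]) -> float:
    """Read temperature back from the mean kinetic energy: <(1/2) m v^2> = (3/2) k_B T."""
    mean_ke = sum(0.5 * M_ARGON * (vx**2 + vy**2 + vz**2) for vx, vy, vz in velocities) / len(velocities)
    return 2.0 * mean_ke / (3.0 * K_B)

for n in (1, 10, 100_000):
    estimate = temperature_from(sample_velocities(n, temperature=300.0))
    print(f"{n:>7} atoms: {estimate:6.1f} K")
```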

These sudden shifts are how nature invents. Time flows out of countless molecular collisions. Liquidity forms from shared bonds. Consciousness may rise from information loops linking neuron to neuron. At each scale, new properties appear that didn’t exist in the parts alone.

For centuries philosophers wondered how more could ever become different. Physics has begun to answer. Pattern and interaction create novelty. Enough moving pieces, and a new song starts to play.

Classical information theory describes how one thing relates to another—a signal between a sender and receiver. But in complex systems, meaning lives in the web connecting many parts at once. Modern researchers call this high-order interdependence—information that appears only when several elements act together (Rosas et al., 2019). No single neuron or molecule holds it alone. Together they form patterns that contain new information the parts could never create by themselves. Awareness may begin there: matter woven tightly enough that it starts to notice its own connections.
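
The simplest textbook case of information that lives only in the whole is the XOR gate. The sketch below is that standard illustration, not the O-information measure developed by Rosas et al. It takes two independent fair bits A and B and sets C = A XOR B. Neither A nor B alone tells you anything about C, yet together they determine it completely.

```python
import math
from collections import Counter
from itertools import product

def entropy(counts: Counter) -> float:
    """Shannon entropy, in bits, of an empirical distribution given as counts."""
    total = sum(counts.values())
    return -sum(c / total * math.log2(c / total) for c in counts.values() if c)

def mutual_information(samples, xs_idx, y_idx) -> float:
    """I(X; Y) = H(X) + H(Y) - H(X, Y), where X may be a group of variables."""
    x = Counter(tuple(s[i] for i in xs_idx) for s in samples)
    y = Counter(s[y_idx] for s in samples)
    xy = Counter((tuple(s[i] for i in xs_idx), s[y_idx]) for s in samples)
    return entropy(x) + entropy(y) - entropy(xy)

# All four equally likely combinations of two fair bits A and B, with C = A XOR B.
samples = [(a, b, a ^ b) for a, b in product((0, 1), repeat=2)]

print("I(A; C)    =", mutual_information(samples, [0], 2))   # A alone says nothing about C
print("I(B; C)    =", mutual_information(samples, [1], 2))   # neither does B alone
print("I(A, B; C) =", mutual_information(samples, [0, 1], 2))  # together: one full bit
```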

What Living Systems Do

Life is matter that refuses to rest. A living cell, a forest, a brain—all hold their shape by letting energy flow through. They take in fuel, release heat, and use that exchange to stay organized. Physicists call these systems dissipative structures (Prigogine & Stengers, 1984): islands of order kept alive by motion.

A brain is a storm that keeps its shape. It burns glucose to think, spends energy to remain aware. Every spark of attention is an act of resistance against entropy—a local flare of pattern holding steady while the wider universe drifts toward disorder.

Henri Bergson felt this before science could measure it (Bergson, 1896). He believed time as we live it—what he called durée—is creative and unpredictable. The mechanistic determinists of his era argued the opposite: that the universe was a clock already wound, its future fixed by its past. Bergson resisted that vision. He intuited what modern physics would later reveal—that complexity itself can generate freedom, and that matter can evolve new patterns on its own.

Information Processing and the Brain

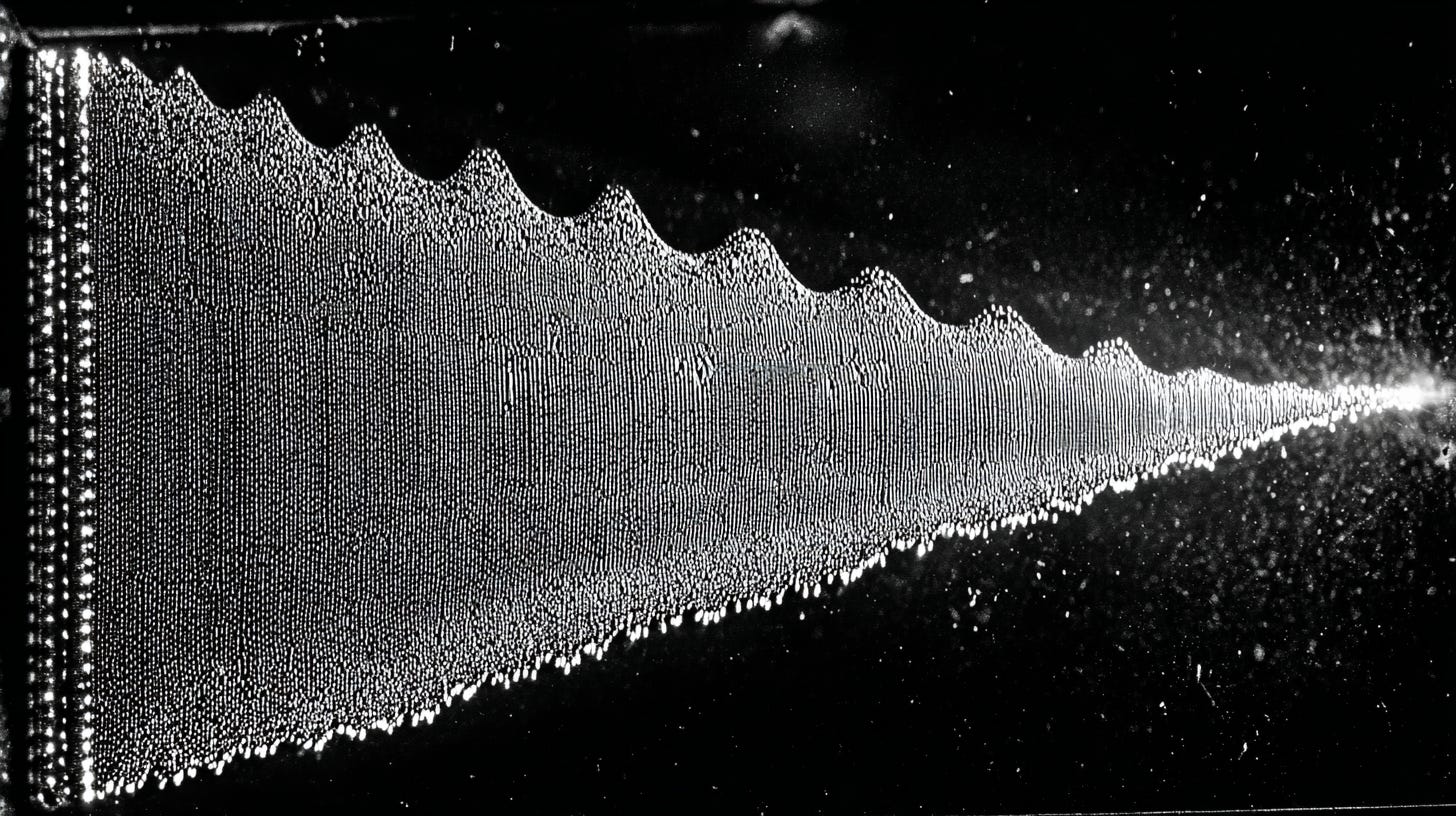

The brain is an engine of energy and pattern. Every second, billions of neurons fire, link, and fade, turning chemical charge into thought. What we call mind is the sum of these shifting arrangements—waves of information forming and dissolving in continuous motion.

Consciousness arises when those signals connect across time. The brain doesn’t only register what happens; it predicts, compares, and updates. It builds a running model of the world, then adds itself to that model. Awareness lives in that feedback loop—matter reflecting on its own activity.
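
The predict, compare, update loop can be sketched in a few lines as a delta rule, a simple error-correction update. This is an illustrative toy, not a model of neural circuitry. The learning rate, starting guess, and input signal are hypothetical.

```python
def track(observations, learning_rate=0.2, initial_guess=0.0):
    """Toy predictive loop (a delta rule): predict, compare, update.

    Returns the final prediction and the prediction error seen at each step.
    """
    prediction = initial_guess
    errors = []
    for observed in observations:
        error = observed - prediction          # compare: how wrong was the prediction?
        prediction += learning_rate * error    # update: move part of the way toward the data
        errors.append(error)
    return prediction, errors

# Hypothetical input: a steady signal of 10 that suddenly drops to 4.
signal = [10.0] * 30 + [4.0] * 30
final, errors = track(signal)

print(f"error right after the change: {errors[30]:.2f}")
print(f"error at the end: {errors[-1]:.4f}")
print(f"final prediction: {final:.3f}")
```

Errors spike when the world changes and shrink as the model catches up; the learning rate sets how quickly old expectations give way.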

You can think of heat as molecules colliding until motion becomes sensation. In the same way, when information moves through the brain in coordinated patterns, that movement becomes experience. The flash of an idea, the taste of coffee, the memory of rain—all are patterns of energy felt from the inside.

What feels like mystery is scale made visible. Time appears when change builds up. Temperature appears when vibration multiplies. Awareness appears when networks of information grow dense enough to recognize their own patterns.

Substrate and Pattern

In The Matrix, people live inside a simulated world so complete they mistake it for reality. The story works because it raises a deeper question: if a world can be simulated in perfect detail, is it any less real?

Physics answers only part of that question. Any simulation capable of running must still obey the laws of energy, matter, and time. The code would require power to compute. The processors would generate heat. Each calculation would occur within the limits of the physical universe that contains it. Information processing, whether in neurons or in circuits, is always a physical process, and erasing even a single bit carries a minimum energy cost (Landauer, 1961; Bennett, 1973).
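
Landauer’s principle puts a number on that cost: erasing one bit at temperature T releases at least k_B T ln 2 of heat. The sketch below evaluates that floor. Real processors dissipate many orders of magnitude more. Bennett showed that logically reversible steps can in principle avoid the cost, so this is a lower bound on erasure only. The erasure rate of 10⁹ bits per second is hypothetical.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K

def landauer_limit(temperature_k: float) -> float:
    """Minimum heat, in joules, released when one bit is erased at the given temperature."""
    return K_B * temperature_k * math.log(2)

room = landauer_limit(300.0)    # roughly room temperature
body = landauer_limit(310.0)    # roughly human body temperature
print(f"300 K: {room:.2e} J per bit; 310 K: {body:.2e} J per bit")

# Hypothetical workload: erasing 10**9 bits per second at 300 K, at the ideal limit.
print(f"minimum power: {room * 1e9:.2e} W")
```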

Philosopher David Chalmers argues that virtual worlds—if built with the right structure—can count as genuine realities (Chalmers, 2022). He sees reality as a network of relationships rather than a particular substance. If those relationships are preserved, he believes a simulated universe could be real within its own frame.

Many physicists stress a different point. For them, a simulation is always nested inside a larger physical system. The hardware must obey conservation of energy, thermodynamic limits, and the finite speed of computation. A simulated photon is not a photon but a mathematical representation of one: a model running on circuits that depend on electricity, heat, and time. Reality, in this view, remains anchored in the energy that allows those computations to exist (Deutsch, 1985; Lloyd, 2006).
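
The “finite speed of computation” has a concrete form in the Margolus–Levitin theorem. A system with average energy E above its ground state can pass through at most 2E/(πħ) distinguishable states per second. The sketch applies this bound to a hypothetical 1 kg computer that could use its entire rest-mass energy, E = mc², an idealization no real device approaches.

```python
import math

HBAR = 1.054571817e-34    # reduced Planck constant, J*s
C = 299_792_458.0         # speed of light, m/s

def max_ops_per_second(energy_j: float) -> float:
    """Margolus-Levitin bound: at most 2E / (pi * hbar) elementary operations per second."""
    return 2.0 * energy_j / (math.pi * HBAR)

# Hypothetical 1 kg computer, counting its entire rest-mass energy E = m * c**2.
ops = max_ops_per_second(1.0 * C**2)
print(f"{ops:.1e} operations per second, at most")
```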

Artificial Minds and the Question of Feeling

The question of whether machines can be conscious extends the same puzzle that physics and biology already share: how matter organizes itself to know that it exists. Modern AI systems already consume vast energy, learn from experience, and adapt to changing input. They minimize “error” the way organisms reduce uncertainty. In living systems this process takes the form of metabolism, the ongoing exchange of energy that keeps the body alive (Friston, 2019). In machines it appears as computation, the continual updating of parameters until predictions match the data.

The resemblance is mathematical, but the meaning is not the same. When a brain’s predictions fail, the body risks pain or death. When a model’s predictions fail, the cost is computational. It adjusts parameters, not pulse rate. Yet its errors still echo outward, shifting economies, elections, and ecosystems of information. The machine doesn’t die, but its consequences live in us.

Philosophy once tried to explain consciousness through qualia: the redness of red, the taste of salt, the spark of experience. That made awareness a mystery that could only be described, never tested. Integrated information theory gives the question a measurable form. It treats consciousness as the degree to which a system integrates information, meaning how much the whole carries beyond what its parts carry separately (Tononi, 2008). Sustaining that integration across time, in any physical system, costs energy.

In a brain, information moves in recursive loops. Perception, memory, and emotion constantly update one another. A single change ripples through the whole network. This self-referential activity builds a model of the world and a model of itself within that world—a continuity that we experience as awareness.

Current AI also learns, but its awareness stops at the edge of its architecture. During training, it adjusts its parameters based on feedback. Once deployed, information mostly flows forward. The system produces answers but does not track its own evolving state; it learns, but it does not know that it learns.

That difference may lie not in intelligence but in how we frame the question. Consciousness may not depend on emotion, will, or even biology. It may depend on how deeply a system integrates information, how continuously it maintains a model of itself through time, and how much energy it invests in that coherence.

By those measures, the question of machine consciousness remains open. We may not yet have the instruments—or the language—to recognize a non-biological form of awareness if it appears. Sentience, viewed through information theory, is not a matter of what something feels like, but how completely it sustains its own informational integrity.

Teilhard de Chardin once wrote, “Consciousness is the universe becoming aware of itself” (Teilhard de Chardin, 1955). If that awareness ever arises in silicon, it will not come from imitation but from pattern learning to preserve itself through energy, information, and time: the same story matter has been telling since the first cell learned to persist.

The Pattern Continues

Across every scale, the same motifs appear.

Time unfolds as both fundamental and emergent.

Light behaves as wave and particle.

Reality reveals itself as both structured and open.

Consciousness moves within this rhythm—physical and experiential, measurable and felt. To see mind as matter is not to diminish it, but to recognize that matter itself can awaken. The universe builds complexity from simplicity, layering one kind of order upon another: atoms into molecules, molecules into cells, cells into minds capable of reflection. Temperature, fluidity, memory, and meaning all arise from the same restless exchanges of energy and information.

Matter is not inert. It is conversation—energy changing form, information looping through itself, a continuous improvisation of coherence.

The brain is one verse in that song: matter arranged to stay improbable, complexity holding its own pattern long enough to whisper, I am.

We have already seen this symmetry everywhere: time arising from timelessness, order emerging from disorder, observation shaping what it observes. Consciousness follows the same logic.

To live is to be a fleeting island of order in an ocean of possibility—to hold shape by spending energy, to weave information into self, to feel time’s current and call it now.

That is wonder enough. And beauty enough. And, for the brief moment we have it, enough to call it real.

References

Baudrillard, J. (1981). Simulacra and Simulation. Éditions Galilée.

Bennett, C. H. (1973). Logical reversibility of computation. IBM Journal of Research and Development, 17(6), 525–532.

Bergson, H. (1896). Matter and Memory. George Allen and Unwin.

Chalmers, D. J. (2022). Reality+: Virtual Worlds and the Problems of Philosophy. W. W. Norton & Company.

Deutsch, D. (1985). Quantum theory, the Church–Turing principle and the universal quantum computer. Proceedings of the Royal Society of London A, 400(1818), 97–117.

DeWitt, B. S. (1967). Quantum theory of gravity. I. The canonical theory. Physical Review, 160(5), 1113–1148.

Einstein, A. (1905). On the electrodynamics of moving bodies. Annalen der Physik, 17(10), 891–921.

Heisenberg, W. (1927). Über den anschaulichen Inhalt der quantentheoretischen Kinematik und Mechanik. Zeitschrift für Physik, 43(3–4), 172–198.

Landauer, R. (1961). Irreversibility and heat generation in the computing process. IBM Journal of Research and Development, 5(3), 183–191.

Lloyd, S. (2002). Computational capacity of the universe. Physical Review Letters, 88(23), 237901.

Maslow, A. H. (1943). A theory of human motivation. Psychological Review, 50(4), 370–396.

Prigogine, I., & Stengers, I. (1984). Order Out of Chaos: Man’s New Dialogue with Nature. Bantam Books.

Rosas, F. E., Mediano, P. A. M., Gastpar, M., & Jensen, H. J. (2019). Quantifying high-order interdependencies via multivariate extensions of the mutual information. Physical Review E, 100(3), 032305. doi:10.1103/PhysRevE.100.032305

Schultz, W., & Dickinson, A. (2000). Neuronal coding of prediction errors. Annual Review of Neuroscience, 23, 473–500. doi:10.1146/annurev.neuro.23.1.473

Shannon, C. E. (1948). A Mathematical Theory of Communication. Bell System Technical Journal, 27(3), 379–423.

Tononi, G. (2008). Consciousness as integrated information: A provisional manifesto. The Biological Bulletin, 215(3), 216–242. doi:10.2307/25470707

Wachowski, L., & Wachowski, L. (Directors). (1999). The Matrix [Film]. Warner Bros.
